December 12, 2024 · 6 min read

Navigating 2025’s Cybersecurity Challenges: Practical Insights for Data Center Operators

In 2024, major incidents such as the National Public Data (NPD) breach exposed the sensitive information of millions of people, including Social Security numbers. Events like this underscore the growing complexity of cyber threats and the critical need for data center operators to remain vigilant.

As threats grow more intricate, proactive measures are essential to safeguard critical infrastructure. This involves understanding emerging risks, crafting comprehensive defense strategies, and carefully evaluating the role of cloud-based service providers in security planning.

This blog will equip you with the insights needed to:

Identify and navigate emerging cybersecurity threats.

Implement practical strategies to reduce risk.

Weigh the pros and cons of outsourcing to cloud-based service providers.

By staying informed and adopting a proactive stance, your data center can remain secure, resilient, and ready for the future.

Understanding the Threat Landscape

As cybersecurity threats evolve, data centers remain integral to the digital infrastructure that supports businesses, governments, and day-to-day life. Because they house vast amounts of sensitive information and enable critical operations, they’re prime targets for malicious actors seeking power, profit, or disruption.

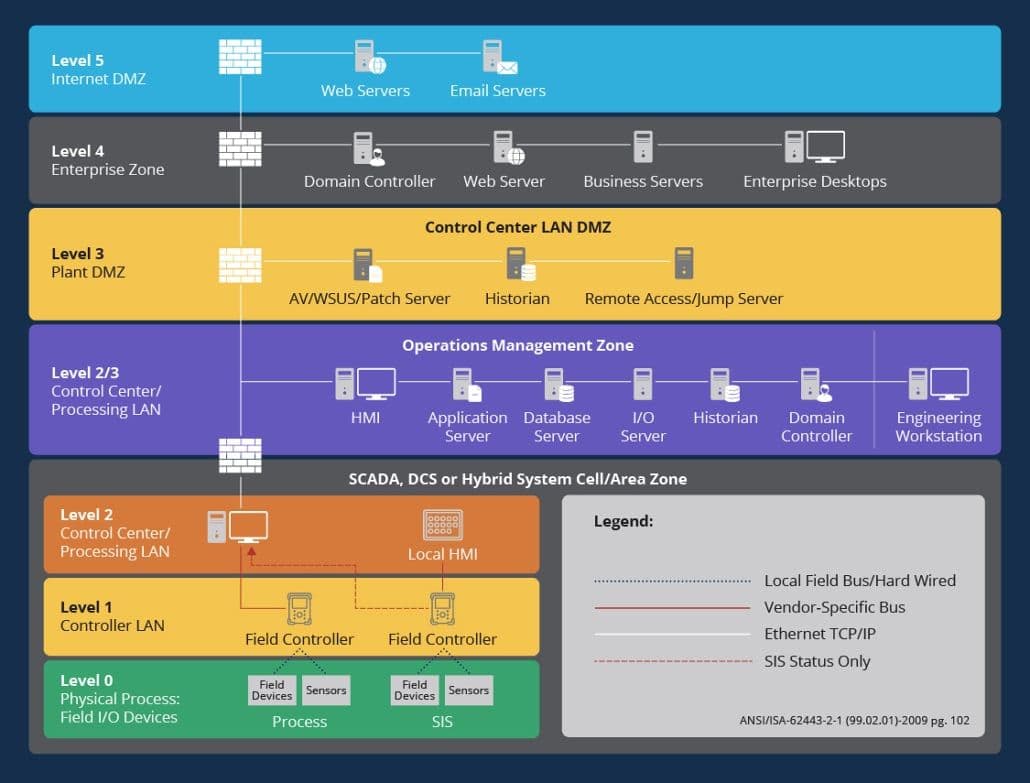

Why the IT/OT Boundary is the New Frontline

Traditionally, IT (Information Technology) and OT (Operational Technology) systems in industrial environments operated as isolated entities, with little interaction or overlap. IT systems manage data, communications, and administrative functions, while OT systems control physical processes and machinery. However, the push to interconnect these systems has grown in pursuit of operational efficiency and innovation.

This interconnectivity, while advantageous, introduces risks. The boundary between IT and OT has become a prime target for cyber attackers. Attackers often infiltrate IT systems and then pivot across this boundary into OT environments, the systems essential to industrial operations and infrastructure stability. These incursions are not random or hasty. They’re calculated, long-term operations designed to establish a foothold for future exploitation.

Threat actors, particularly those with significant resources, target this boundary for several reasons. IT systems often serve as an easier point of entry due to their connectivity, making them a springboard into more sensitive OT systems. The motivation isn’t always immediate disruption. In many cases, these attackers are silently pre-staging their access to prepare for larger, more impactful events.

For data centers, integrating IT and OT environments offers a practical yet advanced way to improve operations. The industry, however, has been cautious about adopting it. Traditionally, these systems are kept separate to reduce perceived risks, with OT services, like building management systems, isolated from IT networks. Yet this separation can limit oversight and cut OT systems off from the advanced management and data processing capabilities of cloud and other IT-hosted services.

Interconnecting these systems brings clear advantages, such as streamlined management and improved functionality. Still, concerns about increased vulnerabilities persist, as a compromised IT system could serve as a launchpad for deeper infiltration into OT networks, disrupting critical operations. By prioritizing robust security measures, organizations can address these risks effectively, making the IT/OT boundary a secure and practical connection point for innovation.

Hacking for Profit or Power

Cybercrime has developed into a sophisticated marketplace, where attackers no longer need deep technical skills to launch devastating attacks. Instead, they can outsource nearly every step of an operation through "cybercrime-as-a-service" models.

Services such as ransomware marketplaces and initial access brokers enable even non-technical individuals to orchestrate complex breaches. For example, attackers can hire specialists to handle reconnaissance, exploitation, or even money laundering, often with service-level agreements (SLAs) and credits for “failed” attempts.

This commoditization of hacking has drastically lowered the barrier to entry, increasing both opportunistic and deliberate attacks. Opportunistic attackers cast a wide net, scanning for exposed vulnerabilities or misconfigured systems, while deliberate actors invest time and resources to penetrate specific targets.

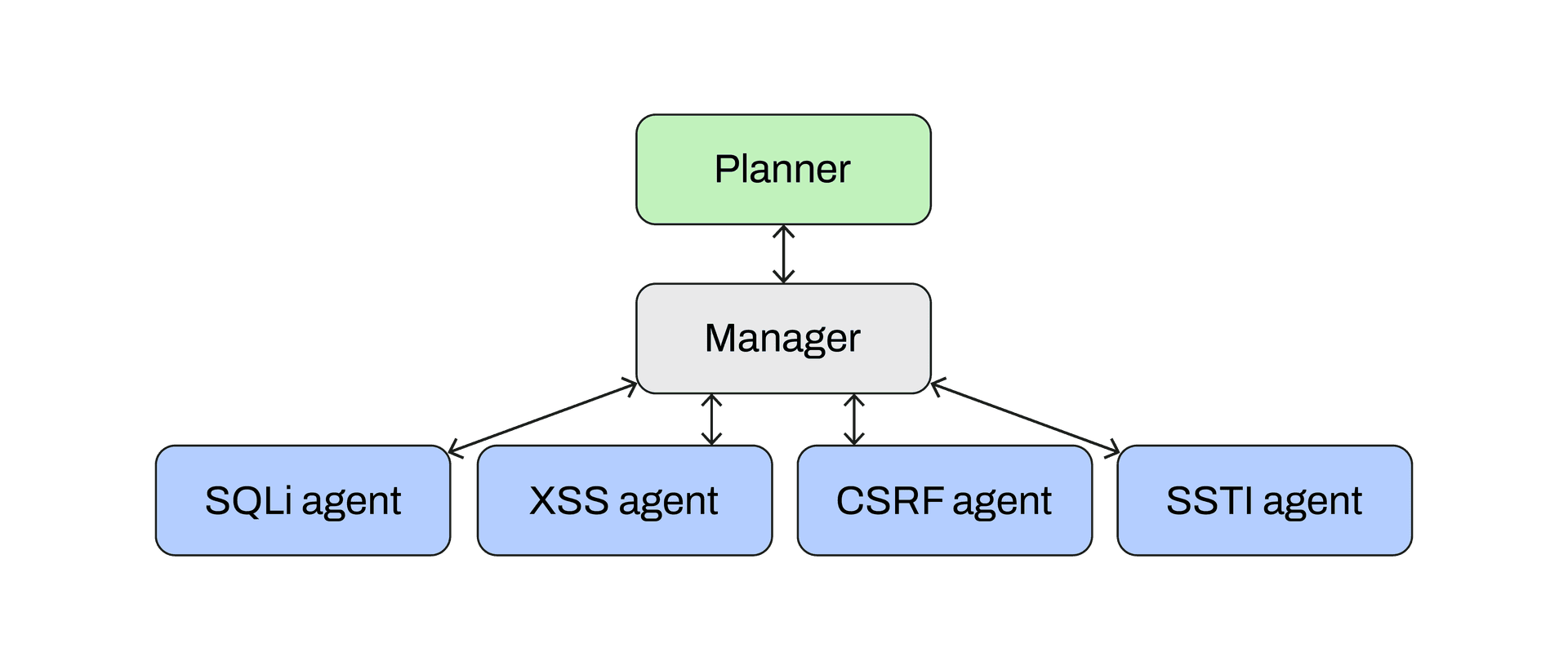

Artificial Intelligence (AI) gives cybercriminals yet another tool. Large Language Models (LLMs) can generate flawless phishing emails that mimic natural language patterns in the targets’ native language, bypassing traditional red flags like grammar errors. Beyond phishing, AI can automate reconnaissance, build exploit code, and even adapt in real time to a defender’s countermeasures. Attackers are also deploying their own AI agents and models, each with a focused task and a defined line of management. This ensemble approach makes the attack system more resilient because it adapts to what each frontline agent experiences, while manager and planner agents select the most appropriate tools for current and future operations, increasing the overall effect on the end objective.

The growing access to sophisticated tools and services creates a critical challenge for data centers. Whether financial gain or long-term disruption motivates attackers, their ability to operate at scale means no organization is immune.

Protecting your infrastructure requires not only robust defenses but also a clear understanding of the evolving tactics used by these adversaries.

The Top Threats for Data Centers in 2025

As cyber threats grow more sophisticated, data centers face a unique set of risks that threaten both their operations and their customers’ trust. Understanding these threats is the first step to building a resilient defense.

1. Credential Misuse

Credential misuse remains the top attack vector, with compromised passwords acting as the gateway for most breaches. Attackers use techniques like credential stuffing or phishing to gain access, then “live off the land” by leveraging existing tools and permissions within the system. This tactic reduces their detectability and maximizes the damage they can inflict.

According to a recent Uptime Institute survey, 75% of data center operators reported experiencing cybersecurity incidents in the last three years, with credential misuse consistently identified as a primary attack vector.
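To make credential stuffing more concrete: it usually shows up as one source trying many different usernames with almost no successes, which looks very different from a single user mistyping their own password. The sketch below flags that pattern from a list of login events. The `LoginAttempt` record, the thresholds, and the sample IPs are illustrative assumptions, not values from the survey or any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginAttempt:
    # Hypothetical, simplified authentication log record.
    source_ip: str
    username: str
    success: bool


def flag_credential_stuffing(attempts, min_distinct_users=20, max_success_rate=0.05):
    """Return source IPs that try many distinct accounts with a very low success rate."""
    users_by_ip = defaultdict(set)
    totals = defaultdict(int)
    successes = defaultdict(int)
    for a in attempts:
        users_by_ip[a.source_ip].add(a.username)
        totals[a.source_ip] += 1
        if a.success:
            successes[a.source_ip] += 1

    flagged = []
    for ip, users in users_by_ip.items():
        success_rate = successes[ip] / totals[ip]
        if len(users) >= min_distinct_users and success_rate <= max_success_rate:
            flagged.append(ip)
    return sorted(flagged)


# One source spraying 25 accounts vs. one user who mistyped a password once.
attempts = [LoginAttempt("203.0.113.7", f"user{i}", False) for i in range(25)]
attempts += [
    LoginAttempt("198.51.100.2", "alice", False),
    LoginAttempt("198.51.100.2", "alice", True),
]
print(flag_credential_stuffing(attempts))  # ['203.0.113.7']
```

In practice the thresholds would be tuned per environment and evaluated over a sliding time window rather than an entire log.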

2. Lite-Phishing and OAuth Trojans

Traditional phishing attacks have evolved into “lite-phishing,” a stealthier variant that omits suspicious links and attachments and instead relies on well-crafted messages to bait a response.

Similarly, OAuth Trojans disguise themselves as legitimate applications, tricking users into granting dangerous permissions. Once authorized, these malicious apps can impersonate users, access critical systems, and move laterally within and across environments, which makes them a significant threat wherever applications have broad integrations.
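One practical countermeasure is to audit which applications users have granted delegated permissions to. The sketch below flags non-allowlisted apps holding high-risk scopes. The scope names follow Microsoft Graph conventions purely for illustration; the `OAuthGrant` record, the app names, and the choice of which scopes count as high-risk are assumptions to adapt to your own identity provider.

```python
from dataclasses import dataclass

# Assumed set of high-risk delegated scopes (Microsoft Graph-style names,
# used here only as an example; adjust for your identity provider).
HIGH_RISK_SCOPES = {
    "Mail.ReadWrite",
    "Mail.Send",
    "Files.ReadWrite.All",
    "Directory.ReadWrite.All",
}


@dataclass(frozen=True)
class OAuthGrant:
    # Hypothetical record exported from a consent/grant inventory.
    app_name: str
    scopes: frozenset


def risky_grants(grants, allowlisted_apps=frozenset()):
    """Return (app, high-risk scopes) for every unvetted app holding such scopes."""
    findings = []
    for g in grants:
        if g.app_name in allowlisted_apps:
            continue  # already reviewed and approved
        risky = sorted(g.scopes & HIGH_RISK_SCOPES)
        if risky:
            findings.append((g.app_name, risky))
    return findings


grants = [
    OAuthGrant("Support Portal Pro", frozenset({"User.Read", "Mail.Send", "offline_access"})),
    OAuthGrant("Corporate CRM", frozenset({"Mail.ReadWrite"})),
]
print(risky_grants(grants, allowlisted_apps={"Corporate CRM"}))
# [('Support Portal Pro', ['Mail.Send'])]
```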

3. Supply Chain Risks

The growing interconnectedness of supply chains creates new entry points for attackers. By targeting smaller, less-secured vendors, cybercriminals can infiltrate larger organizations through backdoor vulnerabilities. For example, attackers might exploit a vendor’s poorly secured identity or workstation to gain access to a data center’s infrastructure, cascading risk throughout the network.

With 43% of data centers allowing third-party access to their networks, as highlighted in the security survey, the risks posed by supply chain vulnerabilities are increasingly pressing. A single weak link in the vendor chain can expose an entire operation to exploitation.
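A simple way to shrink that third-party exposure is a recurring review of vendor accounts. The sketch below flags accounts without MFA, with standing (non-expiring) access, that have expired but still exist, or that have sat idle. The record fields, the 30-day idle threshold, and the sample vendors are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class VendorAccount:
    # Hypothetical fields from an access inventory.
    account: str
    vendor: str
    mfa_enabled: bool
    last_used: date
    expires: Optional[date]  # None means standing, non-expiring access


def review_vendor_access(accounts, today, max_idle_days=30):
    """Map each problematic account to the list of issues found."""
    findings = {}
    for acct in accounts:
        issues = []
        if not acct.mfa_enabled:
            issues.append("no MFA")
        if acct.expires is None:
            issues.append("standing access")
        elif acct.expires < today:
            issues.append("expired but still present")
        if today - acct.last_used > timedelta(days=max_idle_days):
            issues.append("idle")
        if issues:
            findings[acct.account] = issues
    return findings


today = date(2025, 1, 15)
accounts = [
    VendorAccount("hvac-svc", "CoolCo", False, date(2024, 10, 1), None),
    VendorAccount("ups-tech", "PowerCo", True, date(2025, 1, 10), date(2025, 2, 1)),
]
print(review_vendor_access(accounts, today))
# {'hvac-svc': ['no MFA', 'standing access', 'idle']}
```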

A Data Center Nightmare

Picture this: A data center partners with a third-party vendor to handle routine system maintenance. One day, an engineer at the vendor receives an AI-crafted, error-free phishing email disguised as a routine service request. The email contains no suspicious links or attachments, making it indistinguishable from legitimate correspondence. Believing it’s genuine, the engineer replies and is connected to a very helpful “support engineer,” who shares a link to a new support web application that is supposedly required. The engineer opens this “new tool” and quickly clicks through the initial login and permission-consent windows.

What happens next? The attacker uses this OAuth Trojan and the permissions that were willingly granted to impersonate the engineer. The stolen identity is used to gain initial access to the vendor’s network. From there, they move laterally, uncovering a direct pathway into the data center’s operational systems. Within days, the attacker manipulates critical cooling systems, overheating servers and causing widespread outages.

The fallout results in hardware failures, customer disruptions, and weeks of operational downtime. Worse, the incident severely impacts customer trust and exposes gaps in vendor oversight.

Practical Steps to Reduce Risk

Recognizing threats is one thing. Building a defense to match them is another. For data center operators, a reactive stance isn’t enough, so proactive measures must be woven into every layer of the operation. The goal is resilience, not perfection.

Here’s how you can tackle the most pressing issues with practical strategies.

Segmentation

Think of your network like a ship. Without bulkheads to contain flooding, a breach in one area can sink the entire vessel. Segmentation is the bulkhead of your cybersecurity strategy—it isolates critical systems, making it harder for attackers to move laterally and wreak havoc.

Traditional network segmentation remains valuable, creating detonation zones where breaches can be monitored and contained. The Purdue Model offers a structured approach to controlling traffic between levels (North-South) and within them (East-West).
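As a rough illustration of North-South control under the Purdue Model, the sketch below assigns network zones to Purdue levels (including 3.5, the industrial DMZ) and only permits flows within a level or between adjacent levels. Under that rule, enterprise IT can reach site operations only by terminating in the DMZ first. The zone names and their level assignments are hypothetical, and a real policy would also specify ports, protocols, and direction.

```python
# Purdue levels in order; 3.5 is the industrial DMZ between IT and OT.
PURDUE_LEVELS = [0, 1, 2, 3, 3.5, 4, 5]

# Hypothetical zone-to-level assignments for a data center.
ZONE_LEVEL = {
    "chiller-plcs": 1,
    "bms-supervisory": 2,
    "historian": 3,
    "idmz-jump": 3.5,
    "corp-it": 4,
    "internet-edge": 5,
}


def classify_flow(src_zone, dst_zone):
    """Same level is East-West; crossing levels is North-South."""
    return "east-west" if ZONE_LEVEL[src_zone] == ZONE_LEVEL[dst_zone] else "north-south"


def flow_allowed(src_zone, dst_zone):
    """Permit traffic within a level or between adjacent levels only."""
    i = PURDUE_LEVELS.index(ZONE_LEVEL[src_zone])
    j = PURDUE_LEVELS.index(ZONE_LEVEL[dst_zone])
    return abs(i - j) <= 1


print(classify_flow("corp-it", "chiller-plcs"))  # north-south
print(flow_allowed("corp-it", "chiller-plcs"))   # False: skips the DMZ and several levels
print(flow_allowed("corp-it", "idmz-jump"))      # True
print(flow_allowed("idmz-jump", "historian"))    # True
```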

But today, we can take it further with micro-segmentation. Imagine putting each device into its own private VLAN. If one device is compromised, it’s like locking the door to a single room rather than the entire building.
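Micro-segmentation boils down to a default-deny policy evaluated per device: each device may reach only the specific peers and ports it needs, and everything else, including traffic to its own neighbors, is dropped. The sketch below expresses that as a per-source allowlist. The device names are hypothetical; port 47808 is the standard BACnet/IP port, used here as an example of building-management traffic. Return traffic is assumed to be handled by a stateful firewall and is not modeled.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    dst: str
    port: int


# Hypothetical per-device allowlist: (destination, port) pairs each source may use.
ALLOWLIST = {
    "crah-07": {("bms-server", 47808)},   # BACnet/IP to the BMS only
    "bms-server": {("historian", 443)},
}


def is_permitted(flow: Flow) -> bool:
    """Default deny: a flow passes only if explicitly listed for its source."""
    return (flow.dst, flow.port) in ALLOWLIST.get(flow.src, set())


print(is_permitted(Flow("crah-07", "bms-server", 47808)))  # True
print(is_permitted(Flow("crah-07", "crah-08", 47808)))     # False: peer-to-peer lateral move
```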

Identity segmentation plays a complementary role. If one user’s identity spans both high-risk activities, like accessing operational systems, and low-risk ones, like checking email, a breach of that identity becomes catastrophic. Assigning distinct identities for different functions limits the blast radius of any compromise.
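Identity segmentation can be checked mechanically: any single identity that holds both high-risk and low-risk entitlements is a candidate for splitting into separate accounts. In the sketch below, the entitlement names and their risk tiers are hypothetical placeholders for whatever your identity system exports.

```python
# Hypothetical entitlement tiers.
HIGH_RISK = {"ot:bms-write", "ot:cooling-setpoints", "it:domain-admin"}
LOW_RISK = {"email", "web-browsing", "chat"}


def mixed_risk_identities(entitlements_by_identity):
    """Return identities that combine high-risk and low-risk entitlements."""
    flagged = {}
    for identity, ents in entitlements_by_identity.items():
        high = ents & HIGH_RISK
        if high and ents & LOW_RISK:
            flagged[identity] = sorted(high)
    return flagged


identities = {
    "jdoe": {"email", "ot:cooling-setpoints"},   # one identity spanning both tiers
    "jdoe-ot": {"ot:cooling-setpoints"},         # dedicated operational identity
    "asmith": {"email", "chat"},
}
print(mixed_risk_identities(identities))  # {'jdoe': ['ot:cooling-setpoints']}
```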

Identity Management

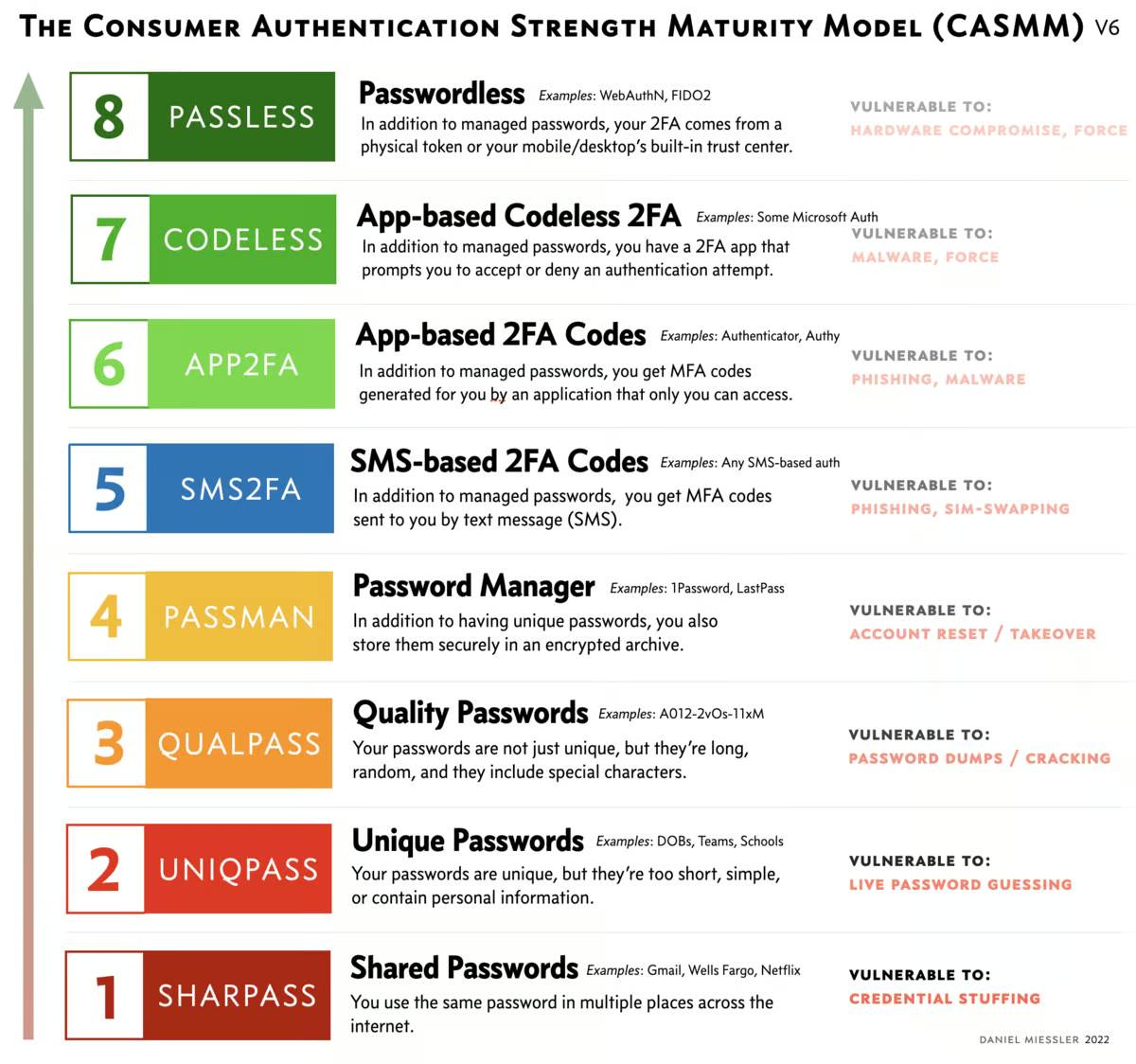

“Identity is the new perimeter” is more than a catchy phrase—it’s the reality of modern cybersecurity. Attackers don’t break in. They log in. So, the strength of your authentication measures often determines the strength of your defenses.

Multi-factor authentication (MFA) is the backbone of identity protection, but it’s not one-size-fits-all. For systems where MFA isn’t an option, deploying a bastion—a secure checkpoint where access is monitored and enforced—adds a critical layer of security. Think of it as a toll booth that checks every vehicle before it gets on the highway.
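The bastion “toll booth” can be sketched as a single gate that every session must pass through: it enforces MFA on behalf of targets that cannot do so themselves, checks per-target authorization, and records every attempt, allowed or not. This is a toy model. The `Bastion` class, target names, and the `mfa_verified` flag (assumed to come from the bastion’s own MFA check) are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Bastion:
    """Toy bastion: the only path to targets that cannot enforce MFA themselves."""

    # Hypothetical mapping of target system -> users allowed to reach it.
    authorizations: dict
    audit_log: list = field(default_factory=list)

    def open_session(self, user: str, target: str, mfa_verified: bool) -> bool:
        allowed = mfa_verified and user in self.authorizations.get(target, set())
        # Every attempt is logged, including denials, for later review.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "target": target,
            "mfa": mfa_verified,
            "allowed": allowed,
        })
        return allowed


bastion = Bastion({"legacy-plc-hmi": {"ops-eng-1"}})
print(bastion.open_session("ops-eng-1", "legacy-plc-hmi", mfa_verified=True))   # True
print(bastion.open_session("ops-eng-1", "legacy-plc-hmi", mfa_verified=False))  # False
print(len(bastion.audit_log))  # 2
```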

The Consumer Authentication Strength Maturity Model (CASMM) offers a helpful framework for gauging and improving your authentication practices. Moving from shared passwords (the weakest level) to hardware tokens like YubiKeys (the strongest) is more than an upgrade. It’s a necessity now that attackers can outsource phishing the way others hire gig workers.

Traffic Monitoring

Traffic monitoring is your early warning system. It detects anomalies before they escalate into full-scale breaches. For data centers, where the stakes are high, proactive monitoring is critical to identifying threats that traditional defenses may miss.

Every network has a rhythm with consistent traffic patterns that reflect normal operations. Establishing this baseline is the foundation of effective traffic monitoring. Deviations from this norm, such as unexpected spikes in data transfers or irregular access attempts, often signal malicious activity.
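One of the simplest ways to turn a baseline into an alert is a z-score: measure how many standard deviations a new observation sits from the historical mean. The sketch below assumes hourly transfer volumes (in MB) from a single host and a threshold of 3 standard deviations. Both are illustrative choices. A production baseline would typically also account for time-of-day and day-of-week patterns.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Baseline = mean and sample standard deviation of historical volumes."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly MB transferred by a building management server.
history = [120, 118, 125, 122, 119, 121, 124, 120]
baseline = build_baseline(history)
print(is_anomalous(123, baseline))  # False: within normal rhythm
print(is_anomalous(900, baseline))  # True: possible exfiltration spike
```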

A recent security survey revealed that 62% of data center operators struggle with identifying and responding to anomalous traffic patterns due to insufficient resources or outdated tools.

Beyond simple anomaly detection, protocol-aware tools analyze traffic patterns and content to uncover subtle signs of malicious behavior. These tools can differentiate between routine activities and potential threats, such as unusual data flows indicative of lateral movement or exfiltration attempts.
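To show what “protocol-aware” means in practice, the sketch below uses Modbus/TCP as an example OT protocol (the article does not name one). It parses the 7-byte MBAP header and the function code, then alerts when a state-changing write (function codes 5, 6, 15, or 16) comes from a host that is not approved to send writes. A plain volume monitor would miss this because a single malicious write is tiny. The IP addresses and allowlist are hypothetical.

```python
import struct

# Modbus function codes that change state on the controller.
WRITE_FUNCTION_CODES = {
    5: "Write Single Coil",
    6: "Write Single Register",
    15: "Write Multiple Coils",
    16: "Write Multiple Registers",
}


def parse_modbus_tcp(payload: bytes):
    """Parse the MBAP header (7 bytes) and function code of a Modbus/TCP frame."""
    if len(payload) < 8:
        raise ValueError("payload too short for Modbus/TCP")
    transaction_id, protocol_id, _length, unit_id = struct.unpack(">HHHB", payload[:7])
    if protocol_id != 0:
        raise ValueError("not Modbus: protocol identifier must be 0")
    return {"transaction_id": transaction_id, "unit_id": unit_id, "function_code": payload[7]}


def inspect_modbus(src_ip, payload, authorized_writers):
    """Return an alert string for writes from unapproved hosts, else None."""
    fc = parse_modbus_tcp(payload)["function_code"]
    if fc in WRITE_FUNCTION_CODES and src_ip not in authorized_writers:
        return f"ALERT: {WRITE_FUNCTION_CODES[fc]} from unauthorized host {src_ip}"
    return None


# Write Single Register: txn 1, protocol 0, length 6, unit 1, fc 6, addr 0x0010, value 0x0050.
write_frame = bytes.fromhex("0001 0000 0006 01 06 0010 0050")
print(inspect_modbus("10.20.0.55", write_frame, authorized_writers={"10.20.0.10"}))
# ALERT: Write Single Register from unauthorized host 10.20.0.55
```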

Detection is only half the battle. Real-time response mechanisms ensure that once an anomaly is flagged, appropriate actions are taken immediately. This might include isolating affected systems, notifying security teams, or triggering automated countermeasures.
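A real-time response mechanism is, at its core, a mapping from an alert to a set of actions. The sketch below is a minimal, hypothetical playbook: the severity levels and action names are placeholders, not a specific SOAR product’s vocabulary. One assumed design choice is that high-severity alerts on OT assets escalate to an operator instead of isolating automatically, since abruptly cutting off a controller could itself disrupt cooling.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def response_actions(severity: Severity, asset_is_ot: bool) -> list:
    """Map an alert to response steps (hypothetical action names)."""
    actions = ["notify-security-team"]
    if severity >= Severity.MEDIUM:
        actions.append("capture-traffic")
    if severity == Severity.HIGH:
        # For OT assets this sketch escalates to a human operator rather
        # than isolating automatically; IT hosts are isolated immediately.
        actions.append("request-operator-approval" if asset_is_ot else "isolate-host")
    return actions


print(response_actions(Severity.HIGH, asset_is_ot=False))
# ['notify-security-team', 'capture-traffic', 'isolate-host']
print(response_actions(Severity.HIGH, asset_is_ot=True))
# ['notify-security-team', 'capture-traffic', 'request-operator-approval']
```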

Operators using real-time traffic monitoring reduced response times by 40% compared to those relying solely on periodic manual checks. This highlights the value of continuous monitoring for mitigating threats.

Effective monitoring also involves understanding the context of anomalies. Without the tools to analyze these nuances, organizations risk overreacting or, worse, missing genuine threats. Investing in AI-driven monitoring systems that can contextualize anomalies helps here: such systems detect deviations and also provide actionable insights into their causes, allowing for targeted and effective responses.

Vulnerability Prioritization

Fixing every vulnerability isn’t feasible, nor is it wise. The Common Vulnerability Scoring System (CVSS) is often treated as gospel, but it doesn’t account for the specific context of your environment. A vulnerability with a high CVSS score might pose little risk if it’s buried deep in a system with no external exposure.

Threat-informed models, like the Exploit Prediction Scoring System (EPSS), offer a more nuanced approach. By analyzing hundreds of data points, these tools predict the likelihood of exploitation, helping you prioritize what matters most. And when a patch isn’t an option? Compensating controls—like restricting network access or tightening permissions—can buy you the time needed to implement a more permanent solution.
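The idea of combining severity, exploit likelihood, and local context can be sketched as a simple score. Below, EPSS (a 0 to 1 probability) is multiplied by normalized CVSS, then adjusted up for internet exposure and down when a compensating control is in place. The weighting factors and the placeholder findings (`CVE-A` and so on) are illustrative assumptions, not an official formula from either scoring system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve: str                    # placeholder identifiers, not real CVEs
    cvss: float                 # 0.0-10.0 severity
    epss: float                 # 0.0-1.0 probability of exploitation
    internet_exposed: bool
    compensating_control: bool


def priority(f: Finding) -> float:
    """Hypothetical score: likelihood x severity, adjusted for local context."""
    score = f.epss * (f.cvss / 10)
    if f.internet_exposed:
        score *= 2.0  # reachable from outside: weight up (assumed factor)
    if f.compensating_control:
        score *= 0.5  # e.g. network access restricted (assumed factor)
    return score


findings = [
    Finding("CVE-A", 9.8, 0.02, internet_exposed=False, compensating_control=False),
    Finding("CVE-B", 7.5, 0.60, internet_exposed=True, compensating_control=False),
    Finding("CVE-C", 8.1, 0.40, internet_exposed=True, compensating_control=True),
]
ranked = [f.cve for f in sorted(findings, key=priority, reverse=True)]
print(ranked)  # ['CVE-B', 'CVE-C', 'CVE-A']
```

Note how the finding with the highest CVSS score lands last: it is unlikely to be exploited and is not externally exposed.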

Threat Modeling

Threat modeling is where strategy meets execution. By mapping out how your systems interact, what data flows between them, and where vulnerabilities lie, you can anticipate attacks before they happen. Frameworks like STRIDE or its expanded version, STRIDE-LM, provide a structured way to identify threats like spoofing, tampering, or lateral movement.
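A common way to apply STRIDE is “STRIDE per element”: each element in a data-flow diagram is checked against the threat categories that typically apply to its type. The sketch below uses that mapping and adds Lateral Movement (the “LM” in STRIDE-LM) to processes and data flows that cross a trust boundary. That LM rule is a simplifying assumption for illustration, and the element names are hypothetical. Some versions of the per-element chart also assign Repudiation to data stores that hold logs.

```python
# STRIDE-per-element mapping commonly used with data-flow diagrams.
STRIDE_PER_ELEMENT = {
    "external_entity": {"Spoofing", "Repudiation"},
    "process": {"Spoofing", "Tampering", "Repudiation", "Information disclosure",
                "Denial of service", "Elevation of privilege"},
    "data_store": {"Tampering", "Information disclosure", "Denial of service"},
    "data_flow": {"Tampering", "Information disclosure", "Denial of service"},
}


def enumerate_threats(elements):
    """elements: iterable of (name, kind, crosses_trust_boundary) tuples."""
    threats = []
    for name, kind, crosses_boundary in elements:
        categories = set(STRIDE_PER_ELEMENT[kind])
        # Assumed rule: boundary-crossing processes and flows enable lateral movement.
        if crosses_boundary and kind in {"process", "data_flow"}:
            categories.add("Lateral movement")
        threats.extend((name, c) for c in sorted(categories))
    return threats


elements = [
    ("vendor-remote-session", "data_flow", True),  # vendor into the OT network
    ("bms-server", "process", False),
]
for name, category in enumerate_threats(elements):
    print(f"{name}: {category}")
```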

This isn’t a one-time exercise. Threat modeling should evolve with your systems, offering an up-to-date blueprint for addressing the most pressing risks. It’s the difference between guessing what an attacker might target and knowing which systems would affect you most if compromised, how those impacts could materialize, and where to strategically reinforce your defensive posture.

A Smarter Foundation

Reducing risk is about building layers of defense that reinforce each other. Segmentation isolates attacks. Identity management limits their scope. Traffic monitoring alerts you to anomalies, while vulnerability prioritization and threat modeling keep your efforts focused where they matter most.

This makes your data center secure and resilient—ready to withstand the inevitable.

Pros and Cons of Remote Cloud-Based Service Providers

As data center operators face increasing pressure to adapt to evolving cyber threats, many turn to remote cloud-based service providers to offload certain responsibilities. These partnerships can bring significant advantages, but they also introduce unique risks. Understanding both sides of the equation is critical to making informed decisions that align with your operational goals.

The Double-Edged Sword of Outsourcing

When you partner with a remote cloud provider, you’re essentially inheriting their risk posture. If their defenses are robust, your systems gain an additional layer of security. But if their approach is lax, that risk becomes your problem. The challenge is visibility—how much insight do you have into their processes, tools, and response capabilities?

For instance, questionnaires and compliance audits are often used to assess vendors, but let’s be honest: these methods are frequently check-the-box exercises. True due diligence requires deeper conversations, clear service-level agreements (SLAs), and ongoing evaluations to ensure alignment.

The Risks of Outsourcing

Inherited Risk: Your provider’s vulnerabilities become your vulnerabilities. If they fall short of securing their systems, the ripple effects can directly impact your operations.

Supply Chain Abstraction: Cloud providers often rely on their own suppliers, creating additional layers of risk you may not see or control.

Core Competency Erosion: Outsourcing essential tasks can lead to a gradual loss of in-house expertise, making you more dependent on external partners over time.

Misaligned Priorities: Your goals and those of your provider might not always align. While you focus on reliability and security, they may prioritize cost efficiencies or scalability, potentially leaving gaps in critical areas.

The Advantages of a Trusted Partnership

When done right, outsourcing can free your team to focus on core operations and strategic goals. It’s more than saving time. It’s about accessing specialized expertise and avoiding the burden of maintaining services that aren’t central to your business.

Efficiency Gains: By offloading non-core tasks, your team can direct its energy toward higher-value activities.

Outcome-Focused Collaboration: A strong partnership can provide thought leadership and insights, helping you innovate and stay ahead of emerging challenges.

Reduced Overhead: Maintaining ancillary services in-house often leads to creeping costs and risks, especially when budgets tighten. Outsourcing can mitigate these challenges, ensuring consistent quality without straining internal resources.

In a 2024 survey, nearly half of operators reported measurable operational improvements after partnering with providers who aligned with their security goals.

Key Considerations for Choosing a Provider

Alignment with Your Goals: Look for providers whose priorities align with your own, particularly in terms of security and long-term reliability.

Transparency and Accountability: Ensure clear SLAs that define not only performance metrics but also their responsibility in the event of a breach.

Collaborative Approach: The best partnerships go beyond transactions—they act as extensions of your team, with a shared commitment to achieving your goals.

Use a decision-making matrix to evaluate providers, balancing their strengths against the specific needs of your operation.
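A decision-making matrix is usually a weighted sum: score each provider on each criterion, multiply by the criterion’s weight, and compare totals. The sketch below uses the considerations listed above plus cost as criteria. The weights, the 1 to 5 scores, and the provider names are all hypothetical inputs you would replace with your own evaluation.

```python
# Hypothetical criterion weights (sum to 1.0).
WEIGHTS = {
    "security_alignment": 0.35,
    "transparency_slas": 0.25,
    "collaboration": 0.20,
    "cost": 0.20,
}


def weighted_score(scores: dict) -> float:
    """Weighted sum of 1-5 criterion scores; every criterion must be scored."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[c] * scores[c] for c in WEIGHTS)


providers = {
    "Provider A": {"security_alignment": 5, "transparency_slas": 4, "collaboration": 3, "cost": 2},
    "Provider B": {"security_alignment": 3, "transparency_slas": 3, "collaboration": 4, "cost": 5},
}
for name in sorted(providers, key=lambda p: weighted_score(providers[p]), reverse=True):
    print(f"{name}: {weighted_score(providers[name]):.2f}")
# Provider A: 3.75
# Provider B: 3.60
```

Weighting security alignment most heavily reflects the priorities described in this section; a cheaper provider can still lose if it scores poorly where it matters most.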

Outsourcing isn’t a silver bullet—it’s a strategic decision that requires careful consideration. For data center operators, the choice comes down to whether the benefits of freeing up resources and accessing external expertise outweigh the risks of dependency and reduced control.

By thoroughly vetting providers and fostering strong partnerships, you can make outsourcing a tool, not a liability.

Conclusion

Cybersecurity is an infinite game. Threats evolve, tools become more sophisticated, and attackers adapt in ways we can’t always predict. But by understanding the landscape, taking proactive measures, and carefully evaluating partnerships, we position ourselves not just to survive but to thrive in this ever-shifting terrain.

Data centers are the backbone of modern infrastructure, and their security is non-negotiable. Whether it’s reinforcing the IT/OT boundary, implementing segmentation, or making strategic outsourcing decisions, every action contributes to a stronger, more resilient foundation. The key is preparedness.

As you move forward, remember that sophisticated security means creating layers of defense that work in harmony. Ask the right questions, work with the right partners, and stay ahead of the curve. In the end, the goal isn’t only to protect what you have. It’s to ensure your operations remain reliable, your reputation stays intact, and your future is secure.

Stay vigilant. Stay resilient. And keep playing the game.

Featured Expert

Learn more about one of our subject matter experts interviewed for this post

Chadwick Thresher

Director of Cyber Enablement

As the Director of Cyber Enablement, Chadwick ensures the ongoing effectiveness and development of Phaidra's management, technical, and business systems to support secure and resilient operations while managing cyber risk. Prior to Phaidra, Chadwick led SpaceX's Cyber Assurance program, where he engaged with customers and regulators while overseeing Governance, Risk, and Compliance efforts across their launch, connectivity, and special programs value streams. Earlier in his career, Chadwick spearheaded security improvements for Microsoft's HoloLens division, consumer device manufacturing, and various internal and external online services. A retired United States Marine and 20-year veteran of the security and IT fields, Chadwick holds an Executive MBA and a Master's in Supply Chain Management from the University of Washington's Foster School of Business.
