October 09, 2024 | 10 min read

Hyperscalers: Sustainability Goals Vs. Operational Reality

Hyperscalers (think Amazon, Google, Meta and Microsoft) are the giants behind the digital world. Their vast data centers keep our cloud-based services running smoothly and our connected lives moving. As their influence grows, so does the scrutiny of their environmental impact. With the spotlight on sustainability, these tech titans have made bold promises.

They’ve set ambitious goals like net-zero emissions, running entirely on renewable energy, and even Microsoft’s unique pledge to remove, by 2050, all the carbon it has emitted since its founding. As impressive as these pledges sound, there’s often a gap between what’s promised and the realities on the ground. The goals are commendable and progress has been made, but the rising demand for artificial intelligence applications, and the enormous computing power they require, makes these sustainability ambitions increasingly difficult to achieve.

In this article, you will learn:

How hyperscalers' sustainability commitments compare to their actual practices.

The innovative strategies hyperscalers use to improve energy efficiency.

The regulatory and public pressures urging hyperscalers to align ambition with reality.

Let’s take a closer look at the real story behind the promise and the practice.

Understanding Hyperscalers

What Are Hyperscalers?

Hyperscalers are the powerhouse companies behind today’s digital infrastructure. These giants have built a global network of data centers that power everything from your email to streaming services. What makes them “hyper” is their ability to scale quickly, handle massive workloads, and operate with remarkable efficiency, all on a global level.

These companies continue to grow each year and are committing massive capital expenditure to securing new land, power and equipment for larger and more complex data center builds. Hyperscalers stand apart not only because of the sheer size of the new data center capacity they have planned but also because they are investing in cutting-edge technology that lets their existing fleets of data centers around the world meet global demand in real time. In fact, hyperscalers account for more than half of all cloud services consumed globally.

But they don’t operate alone. Colocation data center providers play a crucial role in supporting hyperscalers in meeting explosive demand. Colocation companies will either rent IT space that’s available within currently built data centers they own or will ‘build-to-suit’ vast new data centers that are pre-leased by a single hyperscale customer. Like hyperscalers, colocation providers design, build and manage their own physical infrastructure—space, power, and cooling.

Companies like AWS, Google Cloud, and Microsoft Azure are setting industry standards for scalability and efficiency, continuously pushing the boundaries of what’s possible in data center operations.

Hyperscalers vs. Colocation and Enterprise Data Centers

Data centers have long since evolved from the “shipping containers with servers” starting point. They come in different forms—hyperscalers, colocation providers, and enterprises—and each provides critical infrastructure.

Enterprise data centers started as a few servers in something as small as a utility closet. Today they may take up full floors of a building or operate in some built-to-suit space, but they are typically smaller and serve the specific needs of a single organization, like a bank or telecommunication company. These organizations must still manage some of their critical IT infrastructure in-house. Due to their legacy status and smaller scale, enterprise data centers tend to be reactive. They rely on human operators to manually adjust systems and respond to changes in demand, making them less adaptable to rapid scaling. Built in an earlier tech era, they are numerous but unable to be dynamic, operating within the constraints of their original design.

Colocation providers, also sometimes known as “retail” data centers, lease space for IT servers to either various companies in a shared facility or a single tenant. These providers own and manage the power, cooling, security and various other facility functions. Having been around for nearly 20 years, colocation providers also offer "build-to-suit" services, designing, building, and operating entire facilities for a single tenant’s needs—often hyperscalers. This model allows companies to expand their infrastructure without the expense and complexity of constructing and managing additional data centers.

Hyperscalers, on the other hand, are globally distributed networks that use advanced software, predictive algorithms, and the latest tech to manage everything from energy consumption to data storage. They both design, build, and own (or operate) their own data centers and contract with colocation providers for additional capacity. This modern, proactive approach combines elements of both enterprise and colocation data centers but scaled to a global level. As a result, hyperscalers can optimize resources and handle demand spikes more efficiently, achieving a level of efficiency and flexibility that’s difficult for other data centers to match.

What Makes Them Different?

Besides their massive scale, hyperscalers are distinguished by automation, rapid scalability, and global reach, or at least by their pursuit of them. Unlike enterprise data centers, which typically serve a single region or purpose, hyperscalers can ramp up their operations across the globe in seconds.

Need more storage, processing power, or bandwidth? Hyperscalers can deliver it on demand with precision and speed, thanks to sophisticated algorithms and automation.

Efficiency is also a top priority. By operating on economies of scale that enterprise data centers can’t match, hyperscalers can offer services that maintain high levels of performance and reliability.

Why Hyperscalers Matter

Hyperscalers set the standard for global digital infrastructure. They’re essential to advancements in artificial intelligence, machine learning, edge computing, and 5G.

By providing the infrastructure that handles the immense flow of the world’s data, hyperscalers have the power to drive global standards for energy efficiency, sustainability, and operational best practices. Their products and services are among the most widely used in the world, meaning their data centers process enormous amounts of information. As a result, their operational choices don’t just impact their own businesses—they set benchmarks for the entire tech ecosystem, influencing colocation providers, suppliers and even governments.

In short, hyperscalers have the ability—and the responsibility—to lead the charge toward more sustainable, efficient, and innovative data center operations worldwide.

The Sustainability Commitments of Hyperscalers

Hyperscalers like Amazon, Microsoft, and Google have made ambitious sustainability promises. Amazon aims for net-zero carbon by 2040, Microsoft aims to be carbon negative by 2030, and Google intends to run on 24/7 carbon-free energy by 2030. These commitments show the industry's shift toward sustainable operations.

While the goals are bold, turning them into reality is more complex. Hyperscalers are investing in renewable energy, energy-efficient hardware, and advanced cooling technologies such as liquid and immersion cooling.

However, despite these innovations, many data centers still rely on static control systems that don’t fully leverage AI’s potential for optimizing energy use. These static systems create inefficiencies by reacting too slowly to demand, leaving much of the promised energy savings unrealized.

AI could be a game-changer in bridging the gap between ambition and execution. With AI-driven controls, hyperscalers could manage energy use dynamically, preventing inefficiencies before they occur. Yet, many hyperscalers are not using AI to its full potential, continuing to rely on outdated systems that limit real-time optimization.

PUE – A Useful, But Incomplete Metric

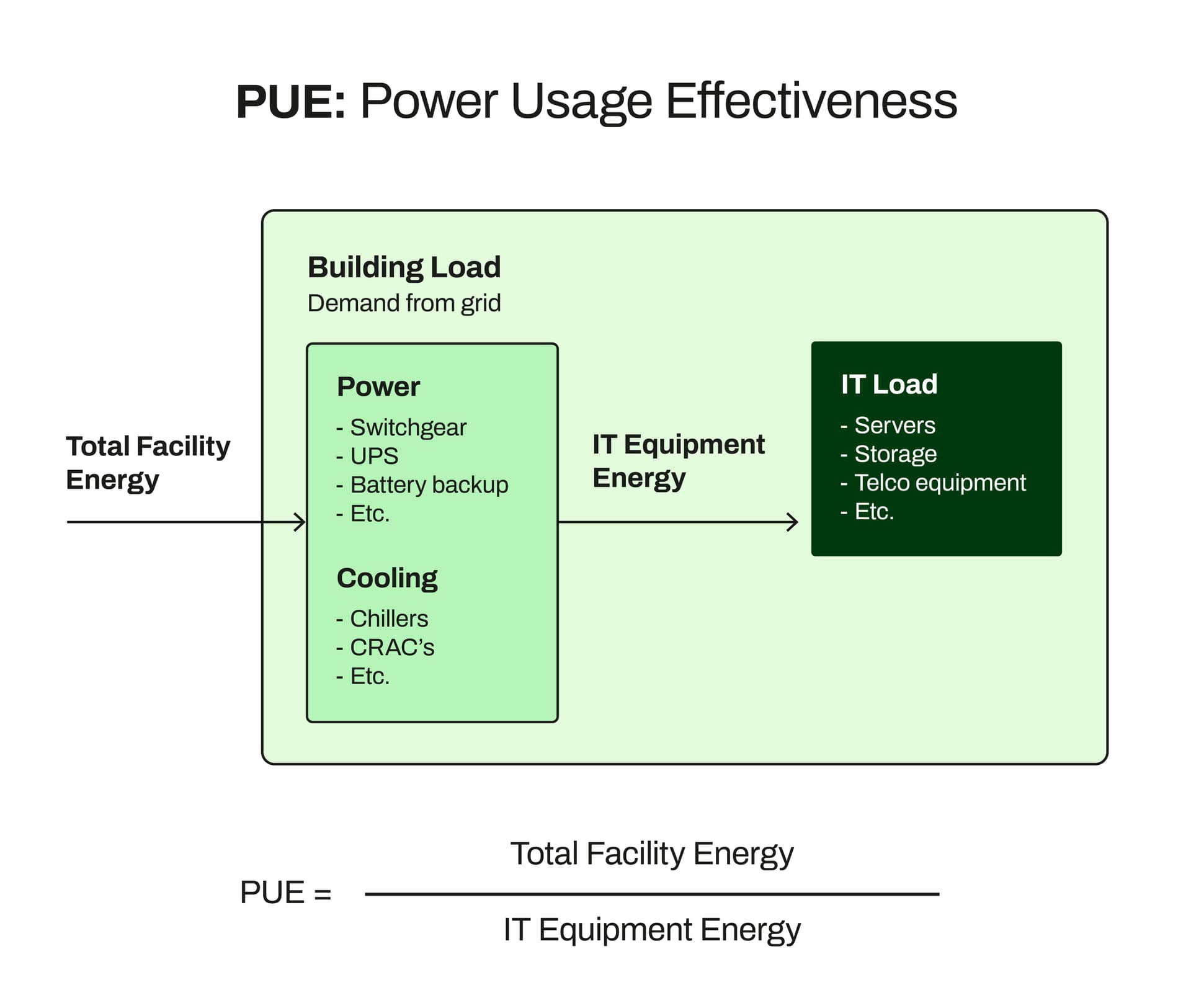

Power Usage Effectiveness (PUE) has long been the gold standard for measuring data center energy efficiency. Introduced in the mid-2000s and promoted by The Green Grid industry consortium, PUE is the ratio of the total energy consumed by a data center to the energy used by its IT equipment. The closer the PUE value is to 1.0, the more energy-efficient the facility.

Figure 1: A chart explaining how PUE, Power Usage Effectiveness, is measured by comparing a data center's total energy consumption to the IT equipment energy consumption

As an example, if a data center advertises a 20 MW IT load capacity and runs at a PUE of 1.5, then the maximum total power the facility draws is 30 MW. This means 10 MW of power goes to cooling, lighting, and other infrastructure needs when the data center is operating at 100% IT load capacity.
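The arithmetic in this example can be written out as a short Python sketch. The function names are illustrative, not part of any standard tool, and the sketch assumes the facility runs at its full 20 MW IT load.

```python
def total_facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


def overhead_power_mw(it_load_mw: float, pue: float) -> float:
    """Power left over for cooling, lighting, and other non-IT needs."""
    return total_facility_power_mw(it_load_mw, pue) - it_load_mw


# The article's example: 20 MW of IT load at a PUE of 1.5.
total = total_facility_power_mw(20.0, 1.5)
overhead = overhead_power_mw(20.0, 1.5)
print(f"total: {total:.1f} MW, overhead: {overhead:.1f} MW")
# prints "total: 30.0 MW, overhead: 10.0 MW"
```

At a PUE of 1.2, the same IT load would need only 4 MW of overhead, which is why small PUE differences matter at this scale.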

PUE offers a simple, comparable measure of performance across facilities, making it an important baseline for the industry. For years, it has pushed data center operators to find more efficient ways to use energy.

However, while PUE is an essential starting point, it doesn’t tell the whole story. PUE captures only the energy dynamics inside the data center, primarily focusing on IT and mechanical power. As data centers adapt to industry changes, there’s a growing recognition that other factors, such as water usage and carbon intensity, play a significant role in sustainability.

A Need for Additional Metrics Beyond PUE

PUE does account for innovations that directly impact energy consumption, such as AI-driven systems that optimize energy usage in real-time and liquid cooling that significantly reduces cooling energy. Yet, it doesn’t consider:

Water Usage: Some data centers have come under scrutiny for their water usage because they are located in water-scarce areas. This metric will become more important even in areas without water scarcity, as larger and denser data centers require more evaporative cooling.

Energy Source: PUE doesn’t differentiate between renewable and non-renewable energy sources, which is critical for assessing carbon footprints.

Embedded Carbon (often called embodied carbon): The building materials, equipment and transport needed to go from an empty plot of land to a fully functioning data center are not yet counted in the industry’s carbon footprint in any meaningful way.

Focusing on energy efficiency alone overlooks these broader elements of a data center’s environmental impact. Additional metrics provide a more complete picture:

Water Usage Effectiveness (WUE): With the rise of liquid cooling systems and larger evaporative cooling data centers, measuring water consumption alongside energy efficiency is crucial. WUE tracks how much water a facility uses per unit of IT energy, reflecting its water sustainability practices.

Operational Lifecycle Costs: Metrics that factor in equipment lifespan, maintenance, and energy use provide a more holistic view of a data center's environmental and economic sustainability.

Carbon Intensity: This metric assesses carbon emissions related to the upstream supply chain for all materials, equipment and transportation used to construct the data center.
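As a rough illustration of how WUE sits alongside PUE, the sketch below uses the common definition of WUE: annual site water use in liters divided by annual IT equipment energy in kWh. The water and load figures are invented for illustration and do not describe any real facility.

```python
HOURS_PER_YEAR = 8760


def wue_liters_per_kwh(annual_water_liters: float, annual_it_energy_kwh: float) -> float:
    """Water usage per unit of IT energy, in liters per kWh."""
    if annual_it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return annual_water_liters / annual_it_energy_kwh


# Hypothetical facility: a constant 20 MW (20,000 kW) IT load for one year.
it_energy_kwh = 20_000 * HOURS_PER_YEAR   # 175,200,000 kWh
water_liters = 315_360_000                # assumed annual water use

wue = wue_liters_per_kwh(water_liters, it_energy_kwh)
print(f"WUE: {wue:.2f} L/kWh")
# prints "WUE: 1.80 L/kWh"
```

Reporting this figure next to PUE shows whether an energy saving was bought with extra water, for example by leaning harder on evaporative cooling.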

PUE Is A Strong Foundation, Not the Whole Solution

PUE serves the industry well and continues to be a valuable benchmark. Yet, as data centers grow more complex and sustainability goals become more ambitious, it’s clear that we need a broader set of metrics. By tracking water usage, lifecycle costs, and carbon intensity alongside PUE, hyperscalers can gain a more complete view of their environmental impact.

In short, PUE is a great starting point, but the industry must go further, leveraging additional metrics to truly measure and improve sustainability.

Discrepancies Between Sustainability Goals and Operational Reality

Hyperscalers have made bold sustainability commitments, but operational realities often tell a different story. While their goals for net-zero emissions and renewable energy adoption are ambitious, achieving them is complicated by inefficiencies and inconsistencies.

A major challenge is their reliance on static control systems, which react after demand spikes instead of adjusting in real-time. This creates inefficiencies, especially when hyperscalers hold colocation providers to stricter standards than they apply to their own facilities. For example, colocation partners are often required to meet stringent Power Usage Effectiveness (PUE) caps that hyperscalers themselves may not achieve.

Scope 3 emissions (the indirect emissions that occur in a hyperscaler’s supply chain) pose another issue. While hyperscalers aren’t directly responsible for the energy consumption of colocation providers, they have committed to reducing these emissions. This means they need their colocation partners to be more efficient. Without dynamic, AI-driven controls and better coordination, achieving these reductions and closing the gap between sustainability goals and actual performance becomes increasingly challenging.

Although hyperscalers like Google and Amazon have made strides in sustainability, such as upgrading to highly efficient equipment, recommissioning control programming to optimize it further, and investing heavily in renewable energy, misalignments with colocation providers persist.

Hyperscalers often enforce strict PUE caps on colocation partners, requiring them to maintain a PUE of 1.40 or lower to secure contracts. However, many colos may actually operate at a PUE closer to 1.45 or higher.

In these cases, colocation providers absorb the extra energy costs (and the related emissions) to meet the hyperscaler's requirements. This allows hyperscalers to claim they have a lower global PUE across their value chain without fully reflecting the reality of their colocation providers.

Moreover, hyperscalers often demand more conservative temperature controls in colocation facilities. A hyperscaler might run its own data halls at a maximum hot aisle temperature of 84°F but contract that colocation partners maintain a cooler 75°F. This demand forces colocation providers to consume more energy for cooling, undermining overall efficiency and sustainability goals.

This dual approach leads to fragmented sustainability efforts. Hyperscalers cap the PUE they’re willing to pay for while mandating more energy-intensive practices in colocation facilities. This contradicts their promotion of stringent efficiency standards, as the practices they mandate often drive inefficiencies elsewhere.

Additionally, there is little to no communication when IT load is expected to increase at a particular facility. This leaves colocation providers reacting to IT load spikes rather than proactively preparing for them, which is typically a more efficient way of handling cooling needs.

For example, a colocation provider may be contractually required to hit a PUE of 1.35, which the hyperscaler then includes in its reported sustainability metrics. In reality, the colocation provider may operate at a higher PUE, such as 1.45, absorbing the additional costs to avoid breaching the contract. This practice allows hyperscalers to base their efficiency claims on contracted targets, ignoring the true energy consumption within their supply chain.
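To give a sense of scale, the sketch below estimates the energy that falls outside the reported figure when the contracted PUE of 1.35 is used in place of an actual PUE of 1.45. The constant 20 MW IT load is an assumption borrowed from the earlier PUE example, not a figure tied to this scenario.

```python
HOURS_PER_YEAR = 8760


def annual_facility_energy_mwh(it_load_mw: float, pue: float) -> float:
    """Annual facility energy for a constant IT load at a given PUE."""
    return it_load_mw * pue * HOURS_PER_YEAR


def unreported_energy_mwh(it_load_mw: float, contracted_pue: float, actual_pue: float) -> float:
    """Energy consumed in practice but missing from a report based on the contracted PUE."""
    actual = annual_facility_energy_mwh(it_load_mw, actual_pue)
    reported = annual_facility_energy_mwh(it_load_mw, contracted_pue)
    return actual - reported


# Contracted PUE 1.35, actual PUE 1.45, assumed constant 20 MW IT load.
gap = unreported_energy_mwh(20.0, contracted_pue=1.35, actual_pue=1.45)
print(f"unreported energy: {gap:,.0f} MWh per year")
# prints "unreported energy: 17,520 MWh per year"
```

Under these assumptions, a 0.10 difference in PUE hides roughly 17.5 GWh a year at a single facility.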

This lack of transparency creates friction across the data center ecosystem. Static systems prevent hyperscalers from fully realizing energy savings, while colocation partners bear the burden of unrealistic demands. This hampers sustainability progress and risks damaging hyperscalers' credibility as environmental leaders.

Why It Matters

Hyperscalers influence the global data center industry, and when they fail to meet their own standards, it weakens the broader sustainability movement. Every inefficiency adds to energy waste, emissions, and missed opportunities for improvement.

To maintain public trust and industry leadership, hyperscalers must align their operational practices with their sustainability promises. As regulatory scrutiny tightens and public expectations grow, bridging this gap is critical for their long-term success.

Innovations in Energy Efficiency and Sustainability

Hyperscalers are at the forefront of energy efficiency through advanced cooling systems, AI-powered automation, and renewable energy integration, closing the gap between sustainability goals and real-world operations.

Cooling is a major challenge for hyperscalers, but immersion and liquid cooling are helping to significantly reduce energy use.

Immersion cooling submerges hardware in liquids to dissipate heat more efficiently than traditional air-cooling methods.

Liquid cooling circulates coolant directly around the hottest components, minimizing wasted energy.

AI-empowered anticipatory cooling takes things further. It anticipates and adjusts cooling needs before temperature spikes occur. This ensures cooling systems activate only when needed, reducing unnecessary energy consumption. Moreover, AI can target location-specific cooling, focusing on particular sections of the data hall where IT load is ramping up, rather than cooling the entire space. This precision reduces unnecessary energy consumption and optimizes overall efficiency.
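The location-specific idea can be sketched in a few lines of Python. This is a minimal illustration rather than a description of any production control system: the zone names, load values, and threshold are hypothetical, and the load forecast is assumed to come from some separate predictive model.

```python
from typing import Dict, List


def zones_to_precool(
    current_kw: Dict[str, float],
    forecast_kw: Dict[str, float],
    rise_threshold_kw: float,
) -> List[str]:
    """Return the zones whose forecast IT load rises by at least the threshold.

    Zones with no forecast entry are treated as unchanged.
    """
    return sorted(
        zone
        for zone, now in current_kw.items()
        if forecast_kw.get(zone, now) - now >= rise_threshold_kw
    )


# Hypothetical IT load per data hall, now and in the forecast window.
current = {"hall-A": 400.0, "hall-B": 650.0, "hall-C": 300.0}
forecast = {"hall-A": 410.0, "hall-B": 900.0, "hall-C": 520.0}

targets = zones_to_precool(current, forecast, rise_threshold_kw=100.0)
print(targets)
# prints "['hall-B', 'hall-C']"
```

A reactive system would instead wait for temperatures in hall-B and hall-C to rise, and a non-targeted one would increase cooling in hall-A as well.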

AI is becoming a game-changer in data center management. By dynamically adjusting cooling, balancing workloads, and anticipating energy spikes, AI optimizes data center performance in real-time.

Think of it like "cruise control" for data centers. AI would automatically make adjustments based on demand before problems arise. Like a smart thermostat, it senses when a room's temperature is rising and takes corrective action in a precise place before discomfort even registers.

While data centers are reaping the benefits of the AI boom, many have yet to fully embrace AI for enhancing their operational efficiency. This gap leaves significant room for improvement in energy optimization and sustainability.

Hyperscalers, however, are investing in energy-efficient hardware to cut down on power consumption. Their servers and modular data centers are designed to perform at high levels while being light on energy usage, helping to reduce energy footprints while boosting performance.

Perhaps one of the most transformative steps hyperscalers are taking is their commitment to renewable energy. Amazon and Google are among the largest corporate buyers of renewable energy, with Google matching 100% of its annual electricity use with renewable energy purchases since 2017. Some hyperscalers are beginning to design in on-site energy storage or load flexibility to use renewable energy more effectively. They are also contracting for new solar and wind farms near their data centers. This helps reduce their reliance on the grid while offering long-term energy cost control.

By taking control of their own green energy production, hyperscalers are setting new sustainability benchmarks and working toward meeting their ambitious goals as energy demands continue to rise.

Environmental Impact and Mitigation Efforts

Hyperscalers and their data centers come with a heavy environmental cost. In 2022, data centers consumed an estimated 460 terawatt-hours (TWh) of electricity, accounting for nearly 2% of global electricity use, a figure set to double by 2026. Despite significant renewable energy commitments, hyperscalers still face challenges in reducing their carbon footprints, especially when it comes to managing fluctuating energy demands.
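A quick calculation shows what these figures imply. The sketch assumes that "double" refers to electricity consumption (460 TWh rising to 920 TWh) over the four years from 2022 to 2026, and it treats "nearly 2%" as exactly 2% to back out an approximate global total.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


# Assumption: consumption doubles from 460 TWh (2022) to 920 TWh (2026).
rate = implied_annual_growth(460.0, 920.0, years=4)

# Assumption: 460 TWh is treated as exactly 2% of global electricity use.
implied_global_twh = 460.0 / 0.02

print(f"implied growth: {rate:.1%} per year")
print(f"implied global use: {implied_global_twh:,.0f} TWh")
# prints "implied growth: 18.9% per year"
# prints "implied global use: 23,000 TWh"
```

Sustained growth of nearly 19% a year is the backdrop against which the efficiency gains described in this article have to be measured.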

So, the question remains: Is it enough? While renewable energy helps offset some emissions, the sheer power demands of these data centers often outpace available green resources. Simply purchasing renewable energy credits doesn’t fully address the immediate impact of their operations, especially in regions where non-renewable energy remains dominant.
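The difference between matching renewable purchases over a whole period and running on carbon-free energy around the clock can be shown with a small sketch. The 24-hour load and supply profiles below are invented, and the hourly score used here is a simplified stand-in for how 24/7 matching is usually described.

```python
from typing import Sequence


def period_match(load: Sequence[float], clean: Sequence[float]) -> float:
    """Share of load covered when clean energy is netted over the whole period."""
    return min(1.0, sum(clean) / sum(load))


def hourly_match(load: Sequence[float], clean: Sequence[float]) -> float:
    """Share of load covered when clean energy only counts in the hour it is produced."""
    covered = sum(min(l, c) for l, c in zip(load, clean))
    return covered / sum(load)


# Hypothetical day: a flat 10 MWh load every hour, and solar-like supply
# of 20 MWh per hour for the 12 daytime hours only.
load = [10.0] * 24
clean = [0.0] * 6 + [20.0] * 12 + [0.0] * 6

print(f"period match: {period_match(load, clean):.0%}")
print(f"hourly match: {hourly_match(load, clean):.0%}")
# prints "period match: 100%"
# prints "hourly match: 50%"
```

In this example the facility can claim a 100% match on paper while drawing on other sources for half of its hours, which is why hourly goals such as 24/7 carbon-free energy are harder to meet.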

Hyperscalers have made strides, but inefficiencies persist. Many data centers still rely on static control systems, missing out on potential energy savings. Additionally, Scope 3 emissions—indirect emissions from their supply chain—are difficult to track accurately due to contracting issues and therefore hard to mitigate, complicating sustainability efforts further.

The Room for Improvement

AI has the potential to forecast energy spikes, adjust cooling systems before problems arise, and optimize server workloads for greater energy efficiency. But the adoption has been slow and uneven. Many hyperscalers are still relying on outdated systems that aren’t designed to optimize energy use dynamically.

Real-time dynamic control could be the key to unlocking the full potential of their sustainability initiatives.

The gap between hyperscalers’ sustainability goals and their current operations undermines their green pledges and erodes public trust. As regulatory scrutiny grows, hyperscalers must prove their progress to avoid tighter regulations and potential penalties. Leading the charge with AI-driven systems and transparent communication could set a new sustainability standard across the industry.

The Influence of Regulatory and Public Pressure

As hyperscalers expand, they face growing regulatory scrutiny, especially in regions like the European Union (EU). The EU's new reporting requirements oblige data centers to disclose energy efficiency, renewable energy usage, and water consumption, as part of a wider aim to cut energy use by 11.7% by 2030. These regulations push hyperscalers toward greater transparency, especially around complex environmental issues.

The EU often sets the standard for global regulations, meaning companies must adapt as similar demands emerge worldwide. Failure to meet these expectations could result in operational challenges and reputational damage.

As public awareness of data centers’ environmental impact grows, so too does the demand for accountability. Both regulators and consumers are seeking real proof of sustainable operations—not just promises.

In addition to regulatory pressure, public expectations are becoming a driving force. While companies are quick to market their eco-friendly credentials, this positioning carries risks. Over-promising and under-delivering on sustainability goals leaves them vulnerable to accusations of greenwashing.

Public trust is fragile, and it is increasingly important as colocation providers and hyperscalers seek more land and more power and must deal with a growing number of community stakeholders concerned about data centers in or near their communities. Any perception that they overstate their green efforts could result in lost business and damaged reputations.

Despite these challenges, hyperscalers are in a unique position to drive sustainability standards across the data center industry. With their scale, they influence colocation partners and suppliers, pushing for best practices. However, they must ensure that their internal operations meet the same high standards they demand from others. As regulatory pressure and public scrutiny increase, hyperscalers have a chance to lead by example and drive meaningful sustainability progress.

What Comes Next?

Hyperscalers are undeniably shaping the future of the digital world. Their bold sustainability commitments, from net-zero emissions to renewable energy targets, lead the charge in how data centers should operate. While innovations in AI, advanced cooling, and renewable energy are helping to bridge the gap, static control systems and simplified metrics like PUE limit the full potential of these efforts.

The future of sustainability in data centers depends on adopting more comprehensive metrics that go beyond energy efficiency. AI and automation will be pivotal in driving real-time optimization, allowing hyperscalers to dynamically manage their resources, reduce carbon footprints, and meet their ambitious goals.

As hyperscalers continue to grow, holding them accountable to their green promises is critical. By embracing emerging technologies and more holistic approaches, they can truly deliver on their sustainability goals and set a new standard for the industry.

Featured Expert

Learn more about one of our subject matter experts interviewed for this post

Mike Sweeney

VP, Corporate Development

As a member of the Leadership team at Phaidra, Mike serves as the VP of Corporate Development. He's responsible for creating and executing strategies that leverage our industrial AI solutions to optimize the performance and resiliency of industrial facilities. Prior to Phaidra, Mike was involved in some of the most groundbreaking projects in the data center industry, such as designing and delivering data centers for Microsoft and Salesforce, leading the hyperscale growth of Silent-Aire as CIO, and developing world class server solutions at Dell Technologies. He's here to help Phaidra achieve its mission of transforming the physical world with self-learning, intelligent control systems.
